How to Develop a Bidirectional LSTM For Sequence Classification in Python with Keras - MachineLearningMastery.com

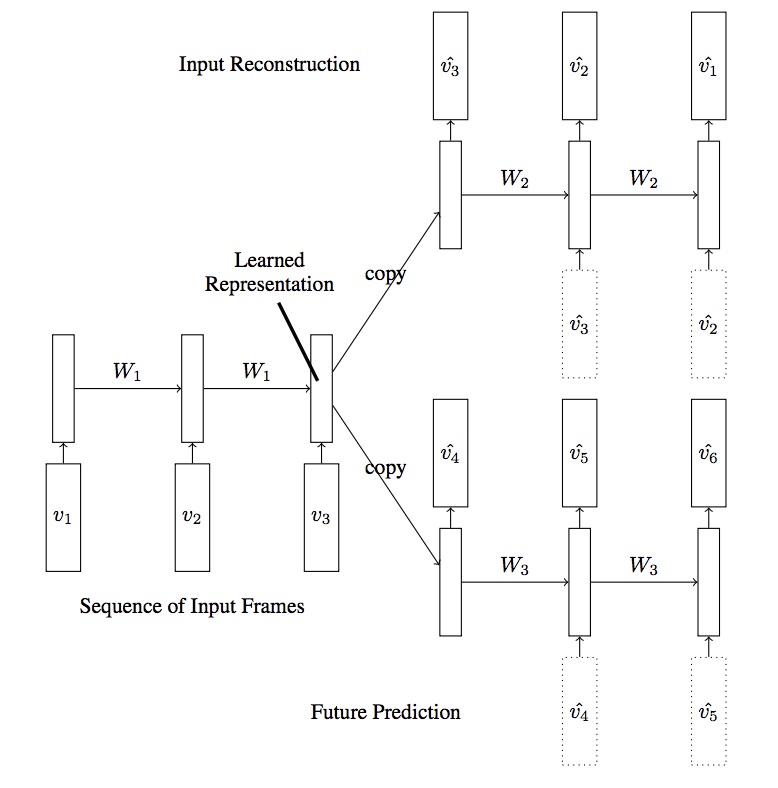

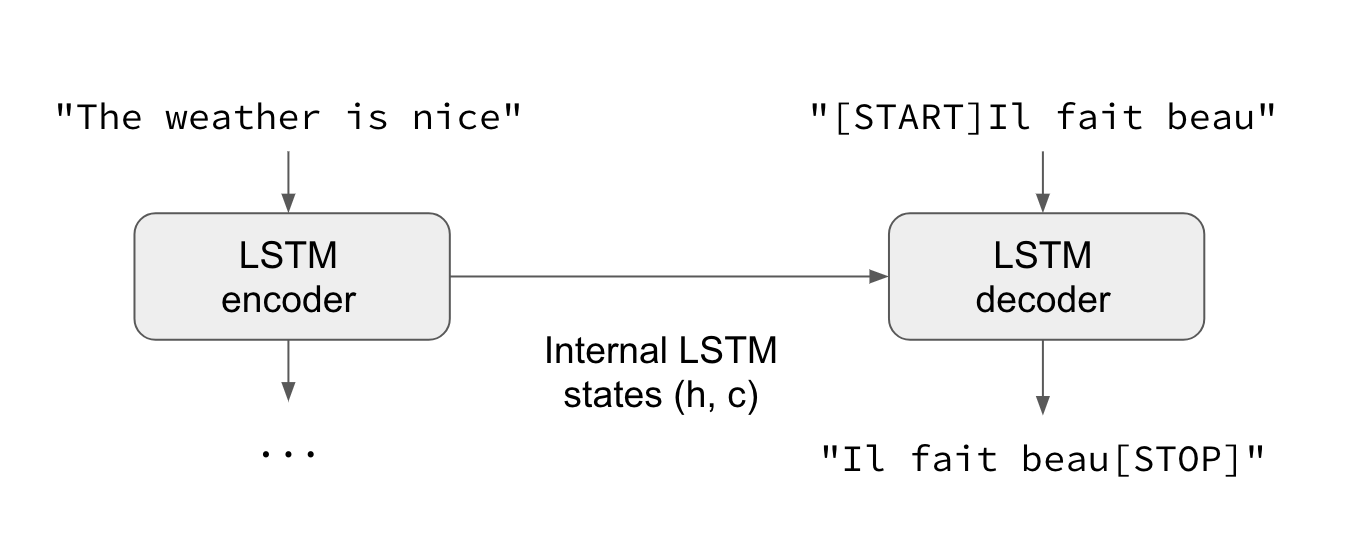

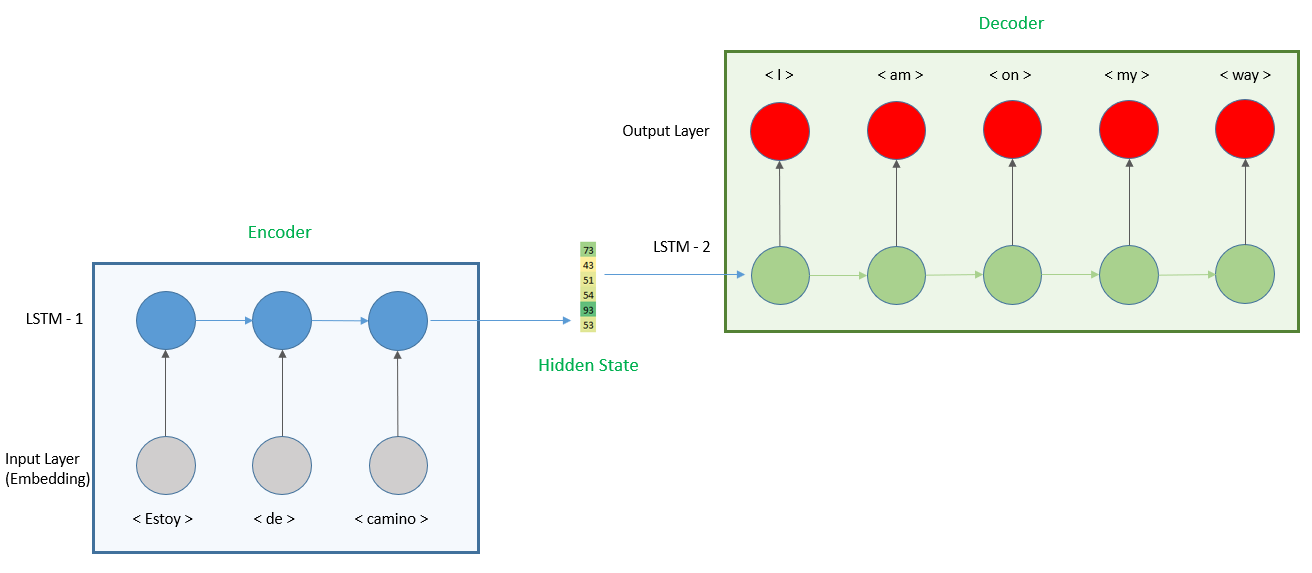

What is attention mechanism? Evolution of the techniques to solve… | by Nechu BM | Towards Data Science

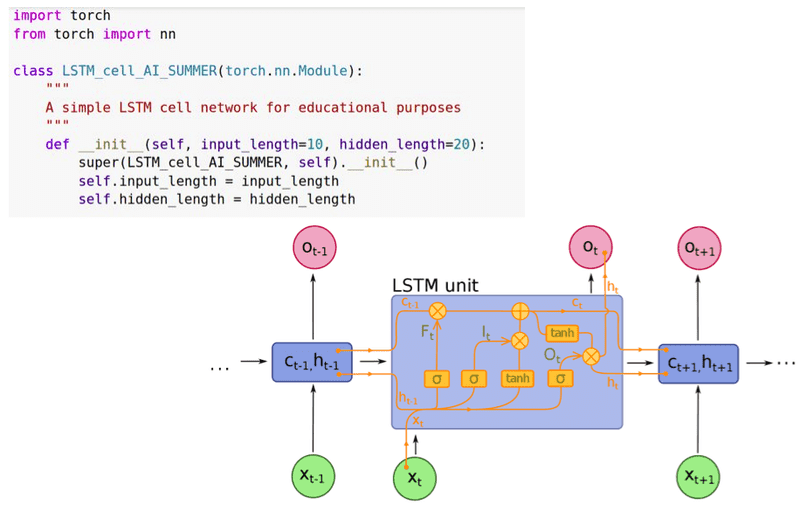

Dissecting The Role of Return_state and Return_seq Options in LSTM Based Sequence Models | by Suresh Pasumarthi | Medium

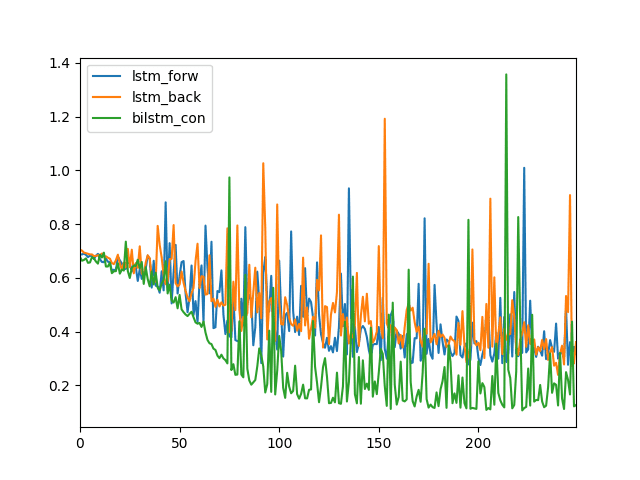

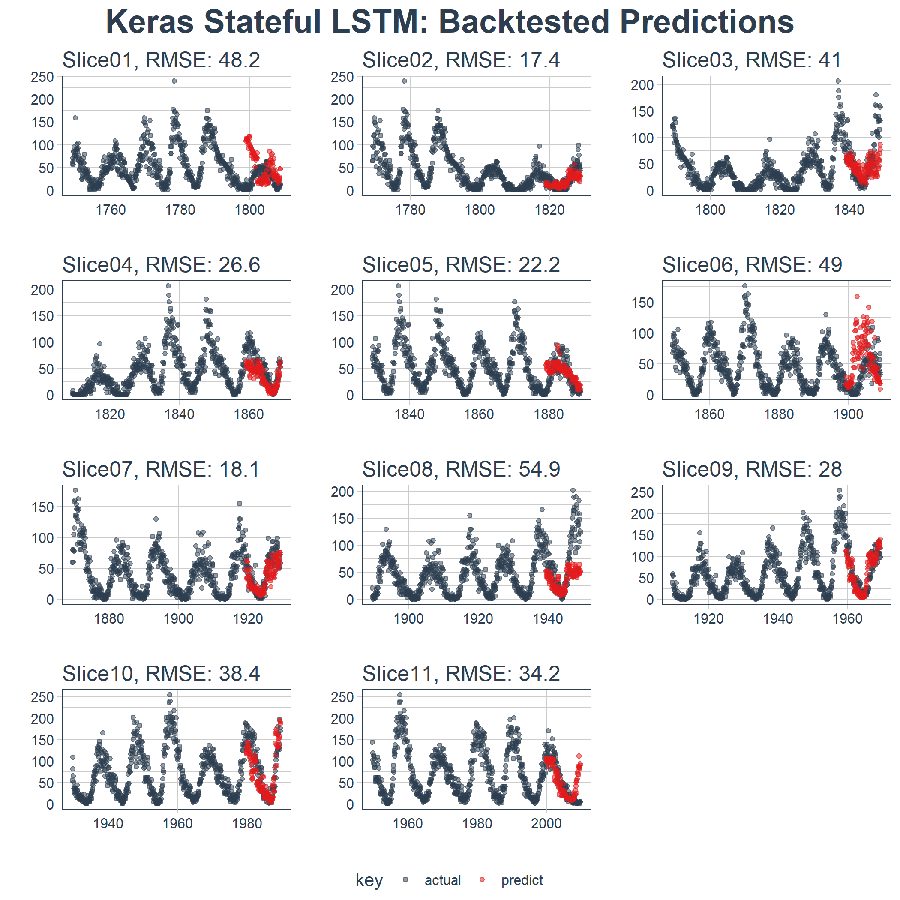

Fractal Fract | Free Full-Text | Forecasting Cryptocurrency Prices Using LSTM, GRU, and Bi-Directional LSTM: A Deep Learning Approach

tensorflow - why set return_sequences=True and stateful=True for tf.keras.layers.LSTM? - Stack Overflow

![\[Keras\] Returning the hidden state in keras RNNs with return_state - Digital Thinking](https://upload.wikimedia.org/wikipedia/commons/thumb/5/53/Peephole_Long_Short-Term_Memory.svg/2000px-Peephole_Long_Short-Term_Memory.svg.png)

![Anatomy of sequence-to-sequence for Machine Translation (Simple RNN, GRU, LSTM) \[Code Included\]](https://media.licdn.com/dms/image/C4D12AQH-Ns14whJEjA/article-cover_image-shrink_600_2000/0/1585168458586?e=2147483647&v=beta&t=Svx_rxhPQ5ohPucYfuRJJeSpL26zbrxASMsrifeUGVA)