deep learning - Difference between sequence length and hidden size in LSTM - Artificial Intelligence Stack Exchange

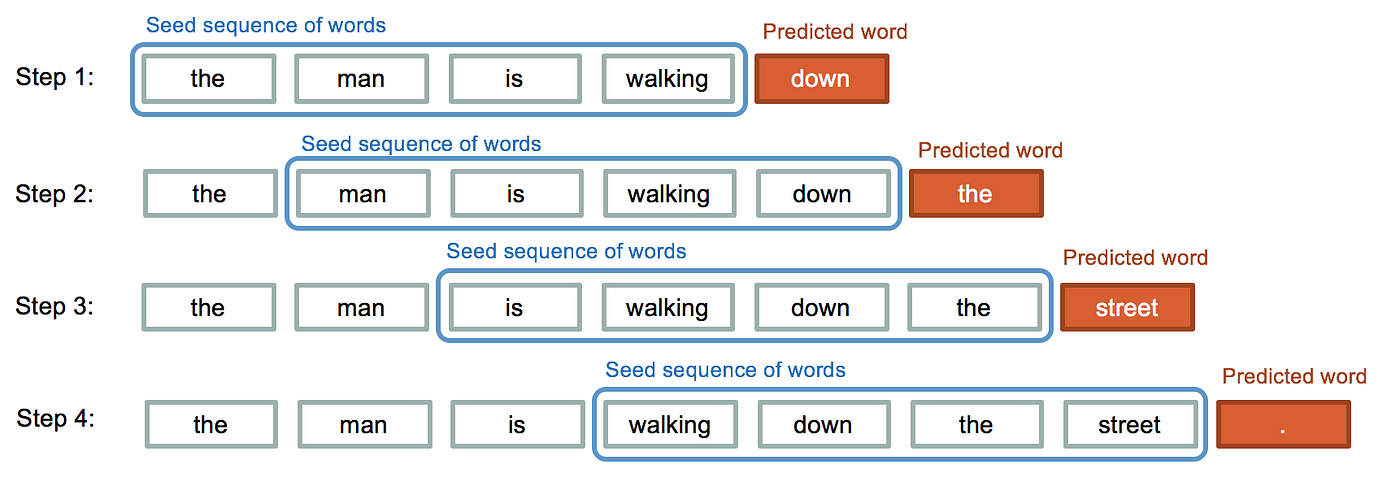

LSTM Recurrent Neural Networks — How to Teach a Network to Remember the Past | by Saul Dobilas | Towards Data Science

Mean square errors from different target sequence lengths (LSTM). All... | Download Scientific Diagram

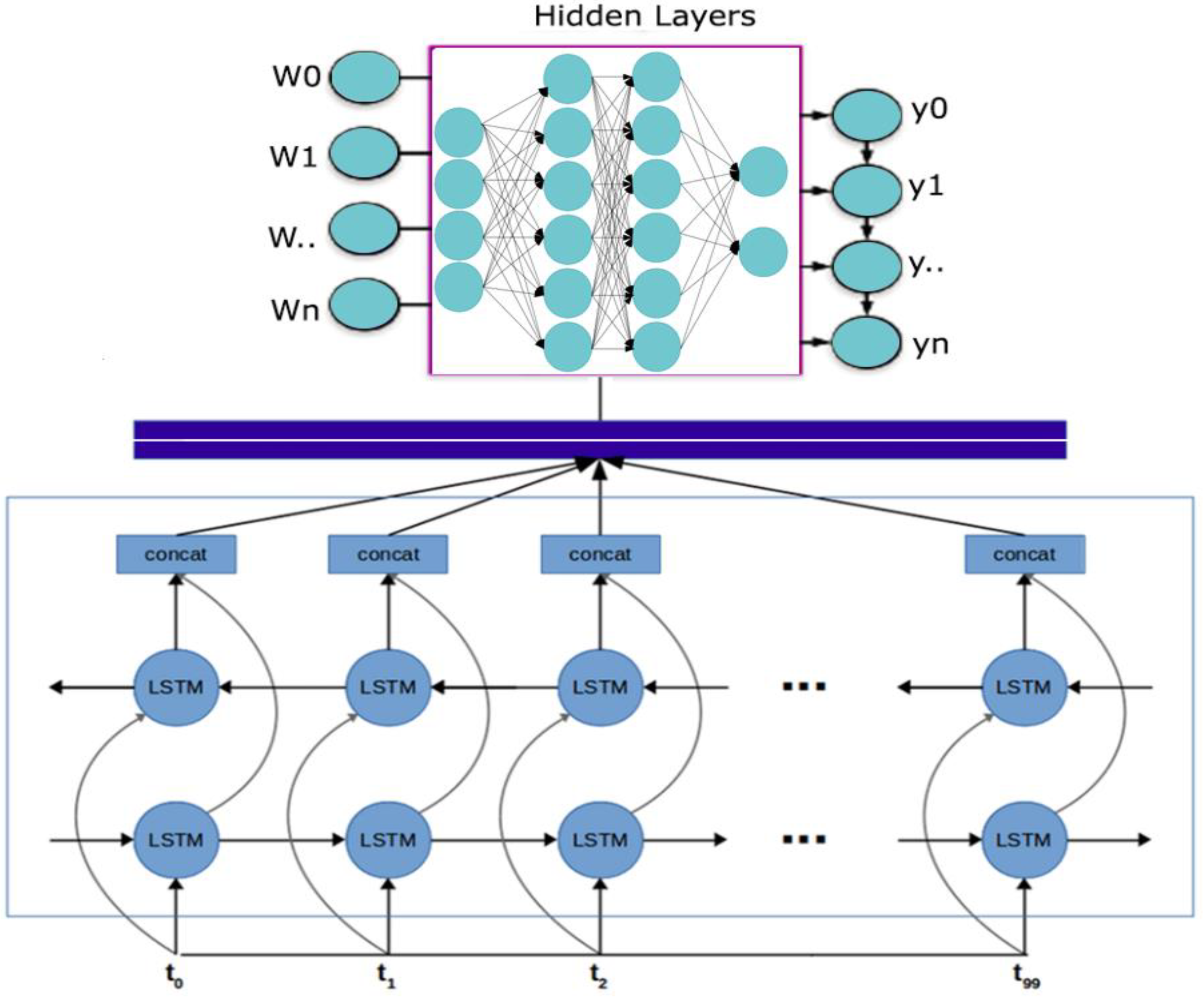

Applied Sciences | Free Full-Text | A Bidirectional LSTM-RNN and GRU Method to Exon Prediction Using Splice-Site Mapping

machine learning - How is batching normally performed for sequence data for an RNN/LSTM - Stack Overflow

Denoise Task with sequence length T = 200 on GORU, GRU, LSTM and EURNN.... | Download Scientific Diagram

Parenthesis tasks with total sequence length T = 200 on GORU, GRU, LSTM... | Download Scientific Diagram

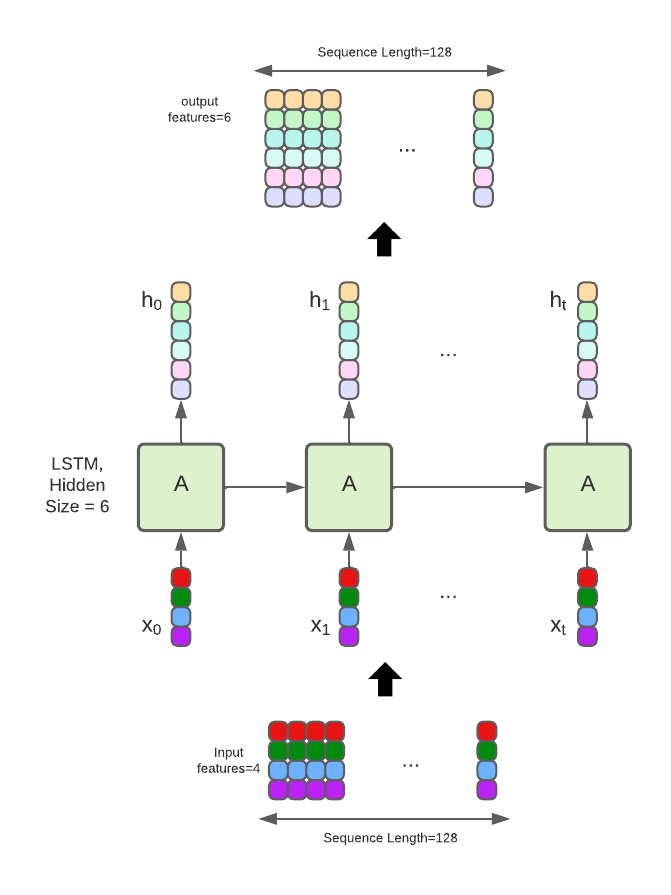

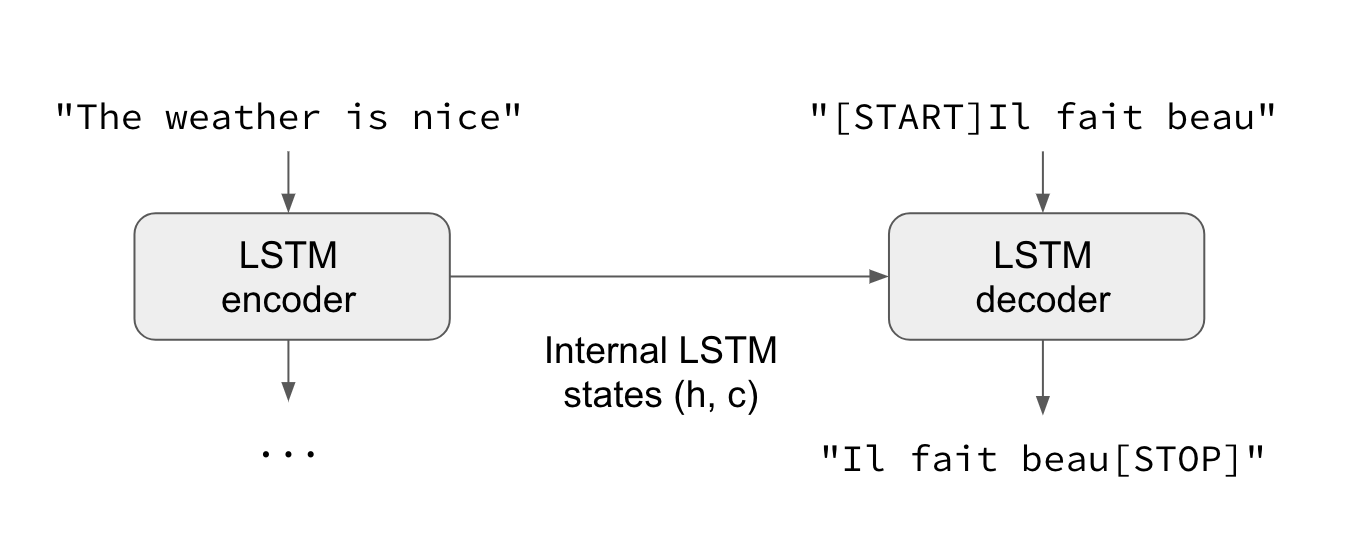

![Anatomy of sequence-to-sequence for Machine Translation (Simple RNN, GRU, LSTM) [Code Included]](https://media.licdn.com/dms/image/C4D12AQH-Ns14whJEjA/article-cover_image-shrink_600_2000/0/1585168458586?e=2147483647&v=beta&t=Svx_rxhPQ5ohPucYfuRJJeSpL26zbrxASMsrifeUGVA)